Intel Optane NVMe Drives, 10Gtek U.2 Adapters, and PCIe Bifurcation

While recently building out my new home lab server, I had the opportunity to install several Intel Optane 905p U.2 NVMe drives. Because my motherboard did not natively support U.2 devices, I acquired multiple 10Gtek U.2 to PCIe adapters to connect six U.2 drives to the system. These adapters come in PCIe 3.0 x16, x8, or x4 versions, depending on the number of U.2 devices the adapter supports. I purchased the following to support six devices:

- 10Gtek PCIe to SFF-8643 Adapter for U.2 SSD, X16, (4) SFF-8643

- 10Gtek PCIe to SFF-8643 Adapter for U.2 SSD, X8, (2) SFF-8643

When using these adapters, your motherboard must support PCIe bifurcation to divide the PCIe x16/x8 slots into multiple x4 links, one per drive. After chatting with a few folks, it seemed to be hit or miss whether they could get these 10Gtek cards to connect multiple U.2 drives to their servers. Below I discuss the issues I encountered and how I resolved them so that my new server could access all six Intel Optane drives.

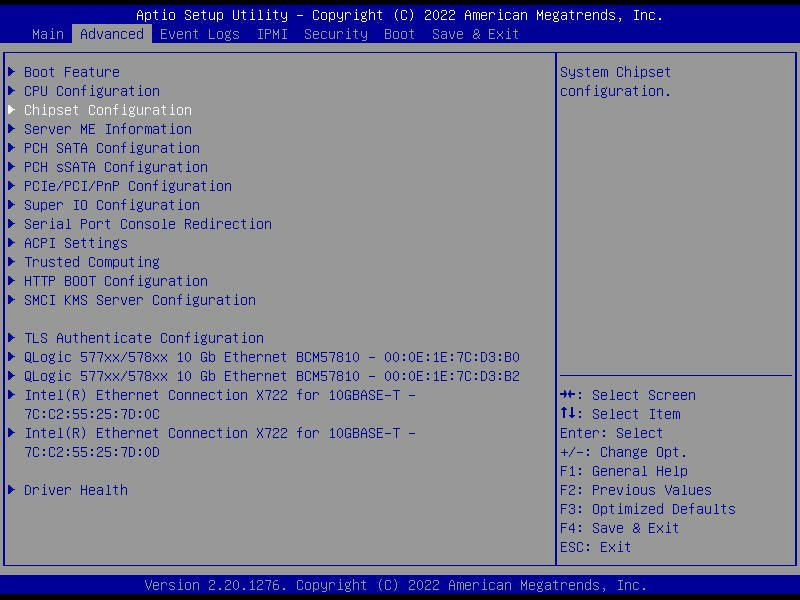

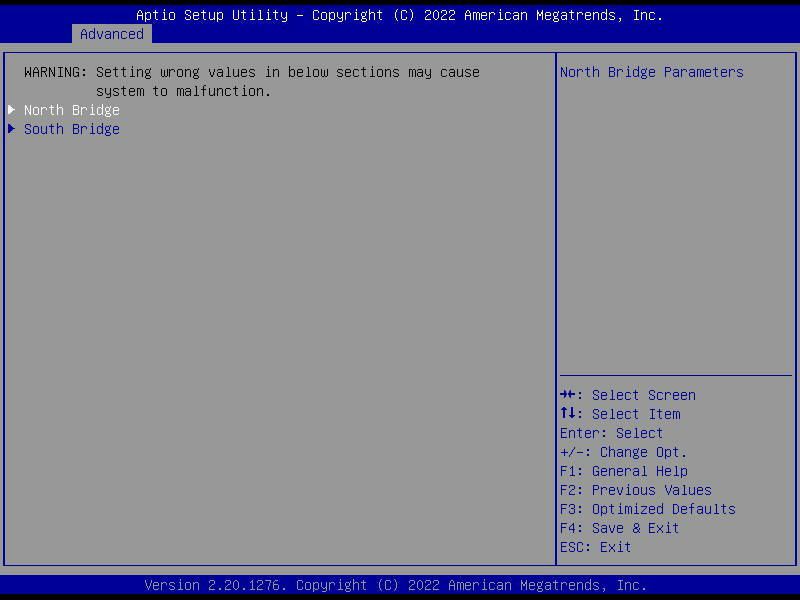

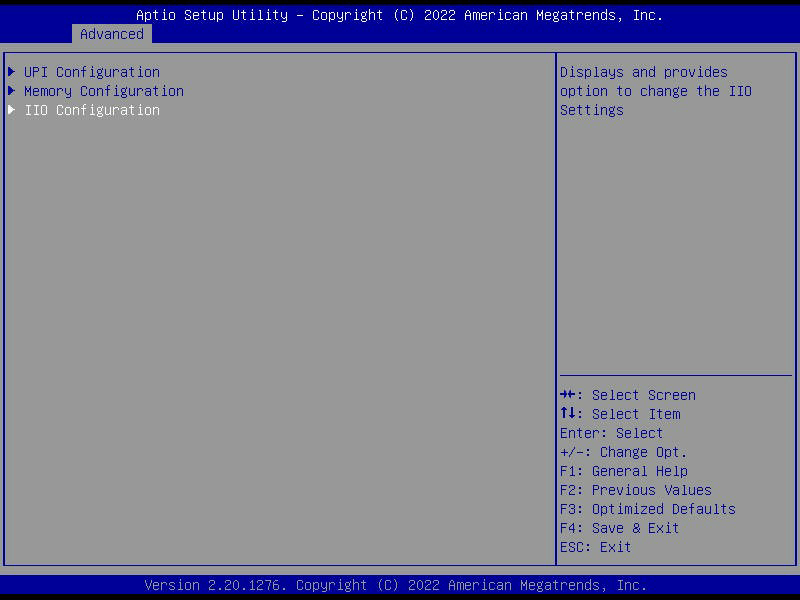

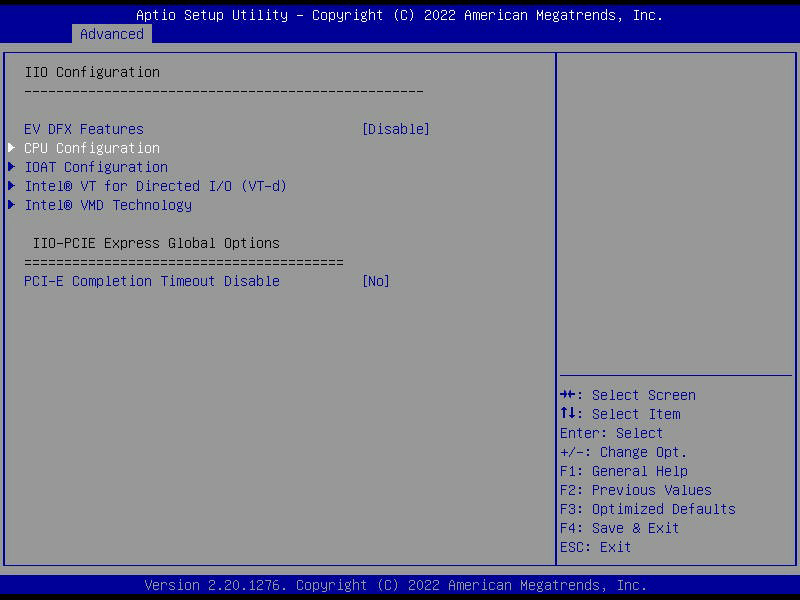

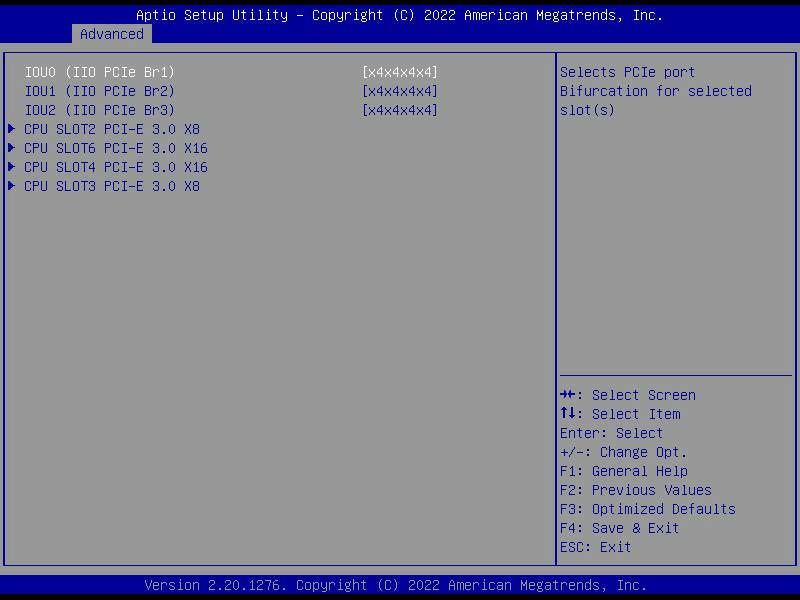

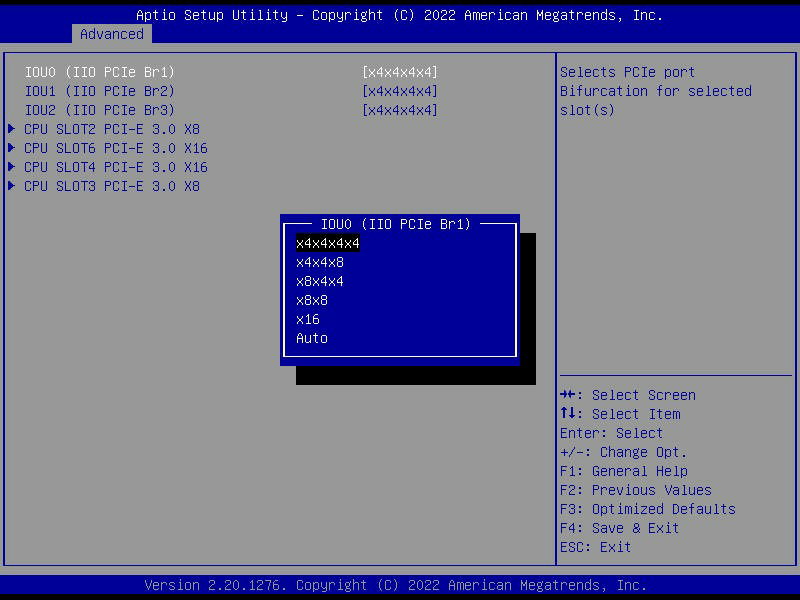

PCIe Bifurcation

In the case of my Supermicro X11SPI-TF server motherboard, the UEFI PCIe bifurcation setting is “automatic” by default. However, only one U.2 device was discovered from each adapter after installing the drives. I began digging into the UEFI settings to find those related to PCIe bifurcation and to determine the current and possible values. The screenshots in this post show the process of changing the UEFI settings. In my case, I changed all three PCIe groups to x4x4x4x4.
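To make the x4x4x4x4 notation concrete, here is a minimal Python sketch of the lane arithmetic. It is a simplified model rather than anything the firmware actually runs: the function names `parse_bifurcation` and `visible_drives` are hypothetical, and it assumes a passive adapter (no PCIe switch chip) where each U.2 drive needs its own x4 link and an unsplit link only exposes the first drive.

```python
import re


def parse_bifurcation(setting: str) -> list[int]:
    """Turn a setting such as 'x4x4x4x4' into link widths [4, 4, 4, 4]."""
    widths = [int(w) for w in re.findall(r"x(\d+)", setting)]
    # Reject anything that is not purely a sequence of xN groups (e.g. 'auto').
    if not widths or "".join(f"x{w}" for w in widths) != setting:
        raise ValueError(f"unrecognised bifurcation setting: {setting!r}")
    return widths


def visible_drives(setting: str, slot_lanes: int, connectors: int) -> int:
    """Estimate how many x4 U.2 drives a passive adapter can expose.

    Assumption: every link the slot is split into reaches exactly one
    drive, so an unsplit x16 or x8 link still shows only one drive.
    """
    used = 0
    links = 0
    for width in parse_bifurcation(setting):
        remaining = slot_lanes - used
        if remaining < 4:  # no room left for another x4 drive
            break
        used += min(width, remaining)
        links += 1
    return min(links, connectors)


# Four-port adapter in an x16 slot, before and after changing the setting.
print(visible_drives("x16", slot_lanes=16, connectors=4))
print(visible_drives("x4x4x4x4", slot_lanes=16, connectors=4))
# Two-port adapter in an x8 slot with the same x4x4x4x4 setting.
print(visible_drives("x4x4x4x4", slot_lanes=8, connectors=2))
```

Under these assumptions, an unsplit slot reports a single drive per adapter, which matches what I saw with the default setting, while x4x4x4x4 gives each connector its own link.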

After updating these settings, additional drives were discovered, but not all. This situation had me quite stumped as it made no sense why one slot would work while the other did not. After reading the user documentation, I finally found the issue, which I’ll discuss next.

PCIe Slot Auto Switch

After enabling the x4x4x4x4 PCIe bifurcation, I could see four of the six Intel Optane drives, and it seemed like the two-port 10Gtek U.2 adapter wasn’t working. While consulting the motherboard documentation to figure out why, I noticed that the specifications list PCIe SLOT3 and SLOT4 as “x8/x16: Supports Auto Switch”. The motherboard’s online specifications also show the following:

- 1 PCIe 3.0 x16

- 1 PCIe 3.0 x16 (x16 or x8)

- 1 PCIe 3.0 x8 (x0 or x8)

- 1 PCIe 3.0 x8

- 1 PCIe 3.0 x4 (in x8 slot)

If the PCIe 3.0 x16 slot is populated with a PCIe x16 card, the slot that shares its lanes (the “x0 or x8” slot) is disabled. If the x16 slot instead holds a PCIe x8 card, the shared slot provides an additional PCIe 3.0 x8. With this information in mind, I moved the two-port 10Gtek adapter to a different PCIe 3.0 x8 slot, and voila, the two other Intel Optane drives were visible within VMware ESXi.
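The auto-switch behavior can be sketched in a few lines of Python. This is only my reading of the “x16 or x8” and “x0 or x8” entries in the specification list, not vendor logic, and the function name `shared_slot_lanes` is hypothetical.

```python
def shared_slot_lanes(x16_card_lanes: int) -> tuple[int, int]:
    """Return (lanes for the x16 slot, lanes for the paired x8 slot).

    x16_card_lanes is the width of the card in the auto-switch x16 slot,
    or 0 if that slot is empty. Assumption: both slots share one group
    of 16 lanes, as the "(x16 or x8)" / "(x0 or x8)" entries suggest.
    """
    if x16_card_lanes not in (0, 1, 2, 4, 8, 16):
        raise ValueError(f"unexpected card width: x{x16_card_lanes}")
    if x16_card_lanes > 8:
        # A full x16 card takes every shared lane; the paired slot gets none.
        return 16, 0
    # An x8 or narrower card leaves eight lanes for the paired slot.
    return 8, 8


print(shared_slot_lanes(16))  # (16, 0): paired slot disabled
print(shared_slot_lanes(8))   # (8, 8): both slots usable at x8
```

In this model, a four-port x16 adapter in the auto-switch slot leaves the paired slot with no lanes, which would explain why the two-port adapter only worked after I moved it to a different x8 slot.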

Lessons Learned

After completing the above troubleshooting, I learned two lessons:

- Don’t assume that “auto” settings will always work regarding PCIe bifurcation.

- Pay attention to motherboard PCIe slot specifications to ensure that slots don’t change in function or capability based on other devices installed on the motherboard.

That’s all for now. Next on my agenda is installing VMware ESXi and building a new nested VMware vSphere virtualization environment on the new lab host.
