New Nested Virtualization Home Lab Server Build

Recently, I participated in a VMware vExpert program in partnership with Intel Corporation and coordinated by Matt Mancini and Simon Todd, where Intel offered to provide VMware vExperts with Intel Optane NVMe devices for use in our home labs. While I wasn’t initially selected to receive the hardware, there was a second-chance opportunity to apply again after working to extend the reach of my social media presence. In addition to the Intel Optane NVMe devices offered during the second-chance opportunity, Matt Mancini graciously donated several home lab devices as prizes for the top three individuals who grew their social media presence the most. After putting in a lot of work to expand my social media reach, I was selected for the first-place prize, an Intel Xeon Gold 6252 Processor.

While I initially did not have a use for the Intel Xeon Gold 6252 Processor, I decided I couldn’t let it go to waste. Thus began my journey to build a new server that I could use to supplement my home lab’s capacity and test the Intel Optane NVMe drives I received. Since this would be a single server instead of a cluster, my primary goal was a server that could host nested instances of VMware vSphere and other components. Nested virtualization would allow me to test things such as deploying VMware Cloud Foundation environments or deploying and testing a VMware vSAN Express Storage Architecture (ESA) cluster.

Selecting Components

Because the Intel Xeon Gold 6252 Processor is quite powerful with 24 cores (48 threads) at 2.10 GHz base frequency, 3.70 GHz max turbo frequency, and 35.75MB of cache, I knew that this new home lab server would need quite a bit of RAM to take advantage of the available horsepower. Additionally, I wanted to ensure that this build included built-in IPMI and remote KVM capabilities. Finally, I had to make sure that the server would have enough PCIe slots and PCIe lanes available to support connecting at least six of the Intel Optane 905P NVMe drives so that I could test a nested VMware vSAN ESA cluster using three nested instances of VMware ESXi with two Intel Optane NVMe devices assigned to each instance. Connecting these drives to the PCIe slots would require PCIe bifurcation support. I’ll discuss this a bit more later.

After receiving the Intel Xeon Gold 6252 Processor and the Intel Optane NVMe SSDs, the first step in this build was to determine which motherboard would meet my requirements. While server/workstation boards are available from manufacturers such as ASRock, ASUS, and Gigabyte, I ultimately chose to go with a Super Micro motherboard due to their reputation for reliability as well as their baseboard management controller (BMC) that supports Redfish APIs, remote KVM, and remote media mounting with no licensing requirements. The board that I selected is the Super Micro X11SPI-TF. It met all of my requirements, including support for the Intel Xeon Gold 6252 Processor and up to 2TB of DDR4 ECC RAM. Most importantly, it provides one PCIe 3.0 x16 slot, an additional PCIe 3.0 x16 slot that can be either x16 or x8, one or two PCIe x8 slots (depending on the configuration of the second x16 slot), and one PCIe 3.0 x4 slot.

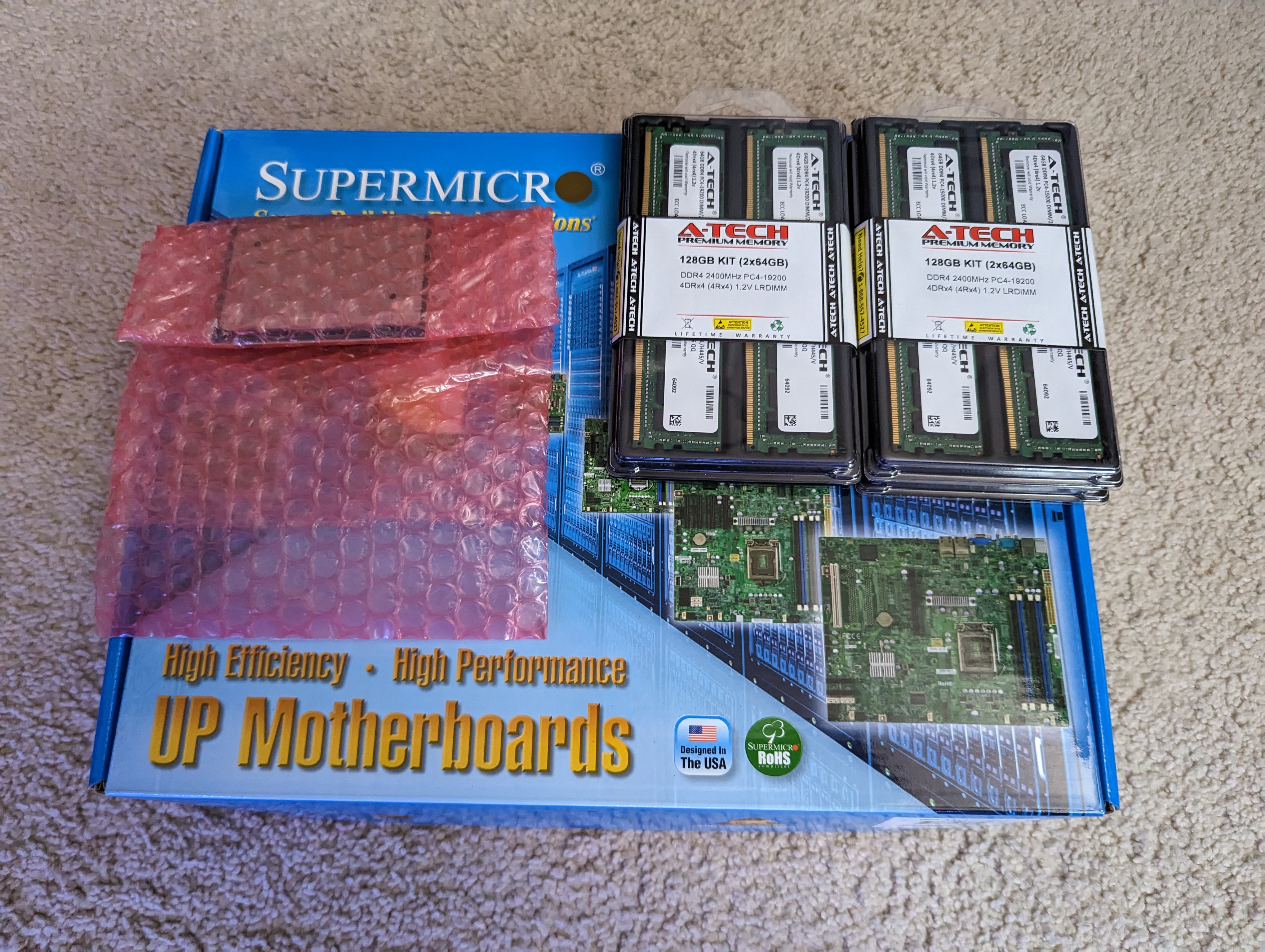

Now that I had decided on a motherboard, I needed to come up with some RAM. Unfortunately, 512GB of DDR4 ECC RAM can be pretty expensive. While the processor is rated for 2,933 MHz DDR4 ECC RAM, I opted for 2,400 MHz DDR4 ECC RAM because it costs less than the 2,933 MHz modules. Selecting the slightly slower RAM saved several hundred dollars on this build in exchange for a small sacrifice in the system’s overall performance.

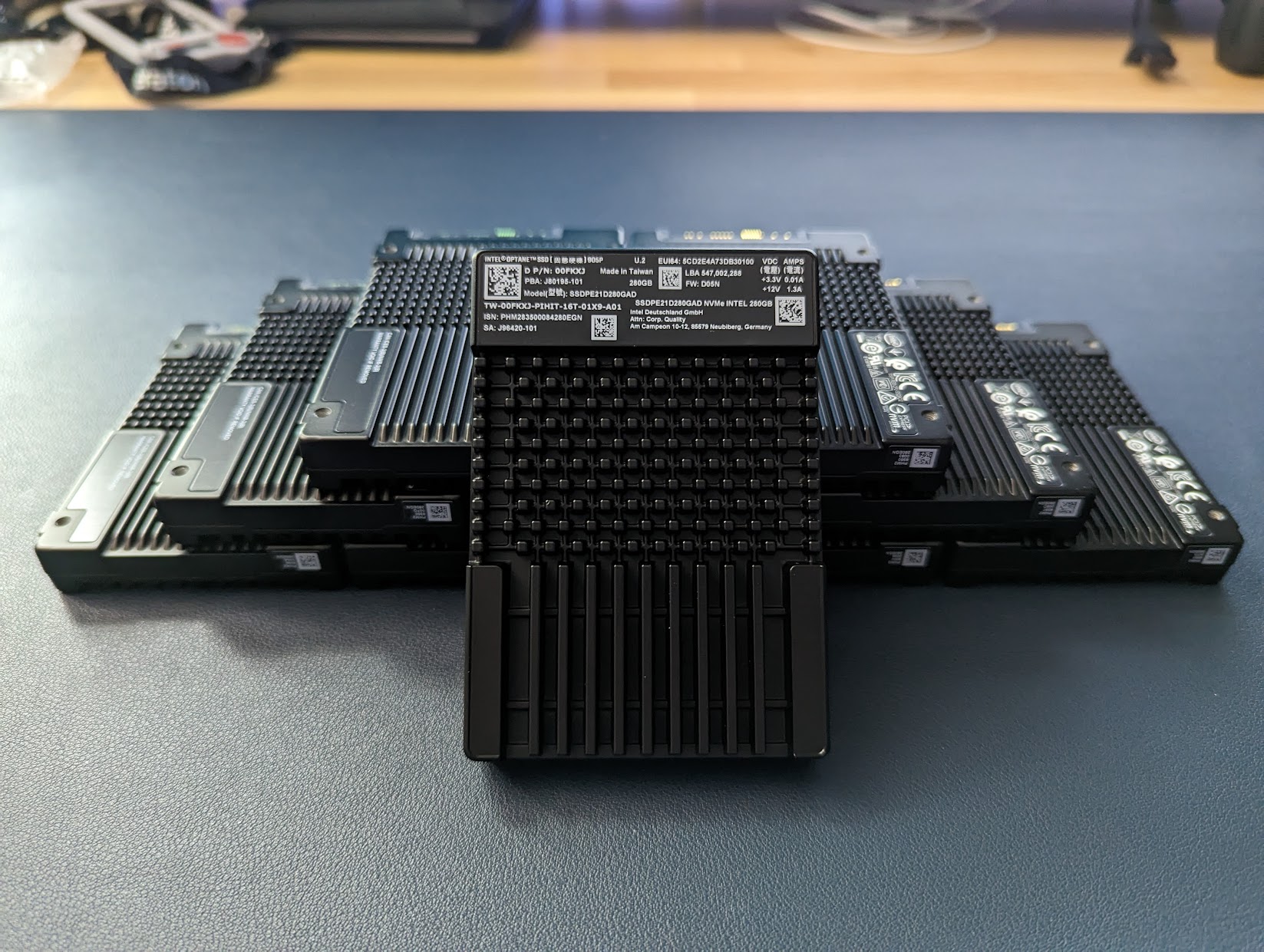
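To put a rough number on that trade-off, here is a minimal sketch of the theoretical peak memory bandwidth at each speed. It assumes the usual 64-bit (8-byte) DDR4 channel, treats the rated speeds as transfers per second, and assumes all six of the processor's memory channels are populated. The function and constant names are my own for illustration, and real-world throughput will be lower than these peak figures.

```python
# Theoretical peak DDR4 bandwidth: transfers/s x 8 bytes per transfer x channels.
BYTES_PER_TRANSFER = 8   # a DDR4 channel has a 64-bit data bus
CHANNELS = 6             # assumption: all six memory channels are populated


def peak_bandwidth_gbs(mega_transfers: int, channels: int = CHANNELS) -> float:
    """Return the theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return mega_transfers * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


rated = peak_bandwidth_gbs(2933)      # speed the processor is rated for
selected = peak_bandwidth_gbs(2400)   # speed chosen for this build
reduction_pct = (1 - selected / rated) * 100

print(f"DDR4-2933: {rated:.1f} GB/s peak")
print(f"DDR4-2400: {selected:.1f} GB/s peak")
print(f"Reduction: {reduction_pct:.1f}%")
```

Under those assumptions, the slower modules give up a bit under a fifth of the peak memory bandwidth on paper.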

Next up, I needed to select a means of connecting the six Intel Optane 905P NVMe SSDs to the motherboard. The Intel Optane 905P NVMe SSDs I received came in the U.2 form factor, each requiring four lanes of PCIe 3.0. Using PCIe bifurcation, a PCIe 3.0 x16 slot can support connecting up to four of the Intel Optane 905P NVMe SSDs. I decided to test out the 10Gtek PCIe to NVMe Adapter Card for U.2 SSD, X16, (4) SFF-8643 (PN NV9614) and the 10Gtek PCIe to NVMe Adapter Card for U.2 SSD, X8, (2) SFF-8643 (PN NV9612) to connect six of the Intel Optane 905P NVMe SSDs to this new server using one PCIe 3.0 x16 slot and one PCIe 3.0 x8 slot. The 10Gtek adapters require PCIe bifurcation, which splits the x16 and x8 slots into individual x4 links, one for each Intel Optane NVMe U.2 SSD. In addition to the 10Gtek adapters, I also purchased six Mini SAS SFF-8643 to U.2 SFF-8639 NVMe SSD cables with 15P SATA power connectors to connect the Intel Optane 905P NVMe SSDs to the 10Gtek adapters.

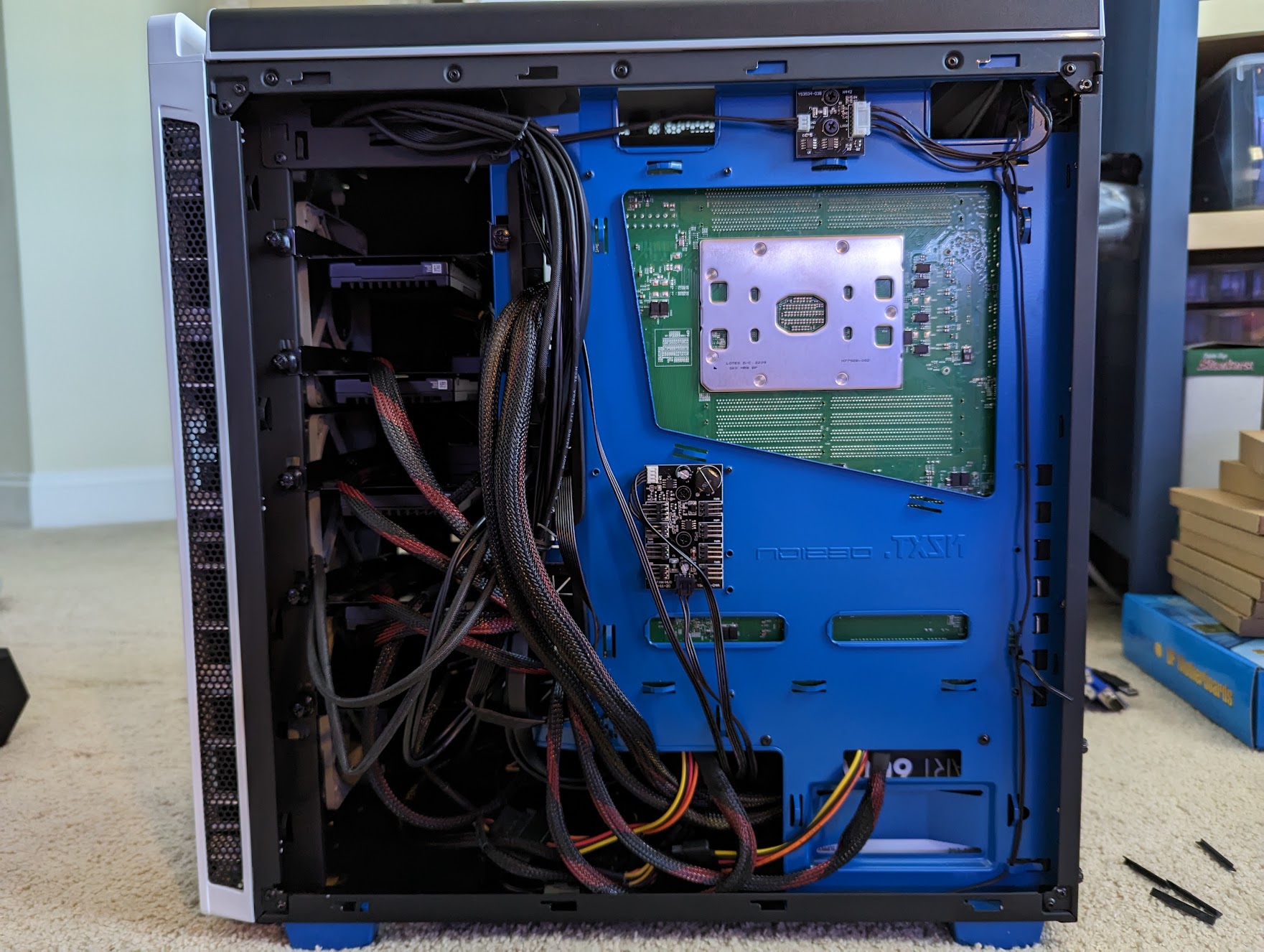
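The lane math behind this layout is simple enough to sketch. The snippet below is only an illustration: the helper names are hypothetical, and the "4x4x4x4" style label is just a common shorthand for a bifurcation mode, so the exact wording in a given BIOS may differ.

```python
# Each U.2 Optane 905P needs a PCIe 3.0 x4 link, so a bifurcated slot
# can host (slot width // 4) drives.
LANES_PER_DRIVE = 4


def drives_per_slot(slot_width: int) -> int:
    """Number of x4 NVMe drives a slot supports once split into x4 links."""
    return slot_width // LANES_PER_DRIVE


def bifurcation_label(slot_width: int) -> str:
    """Shorthand for the split, e.g. 'x4x4x4x4' for an x16 slot."""
    return "x4" * drives_per_slot(slot_width)


# Slot widths used in this build, keyed by the adapter installed in each.
slots = {"NV9614 in x16 slot": 16, "NV9612 in x8 slot": 8}

total_drives = 0
for name, width in slots.items():
    count = drives_per_slot(width)
    total_drives += count
    print(f"{name}: {bifurcation_label(width)} -> {count} drives")

print(f"Total: {total_drives} drives using {total_drives * LANES_PER_DRIVE} lanes")
```

This confirms that one x16 slot plus one x8 slot covers all six drives using 24 PCIe lanes.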

Finally, the remaining items were the case, power supply, and cooling. Luckily, I already had most of these items on hand. I reused an existing NZXT H440 EnVyUs case because it is excellent at absorbing noise and has room for eleven 3.5" or eight 2.5" drives. In addition to this case, I added multiple Noctua Redux fans and a Super Micro 4U Active CPU Heat Sink Socket LGA3647-0 (SNK-P0070APS4). This combination of case and cooling fans allows the system to run quietly, even with the fans set to their maximum speeds.

Bill of Materials

Below is a table summarizing the bill of materials, including the costs. Because some components, such as the ATX case and Intel Xeon processor, were reused from previous builds or were free, I did not include cost values for them.

As you can see, most of the cost is from the motherboard and the RAM. While I likely could have found a cheaper alternative for the motherboard, such as purchasing a used server/workstation, I opted to buy new hardware to customize the build to my component selections.

Future Testing

In future blog posts, I will provide more information on the configuration of this VMware vSphere ESXi host, details on the nested VMware ESXi configuration, and testing of VMware vSAN ESA using Intel Optane NVMe devices.

See Also

- Unable to Reuse vSAN Disks for New vSAN Cluster

- Intel Optane NVMe Drives, 10Gtek U.2 Adapters, and PCIe Bifurcation

- TP-Link JetStream 8-Port 10GE SFP+ L2+ Managed Switch Review

- DISA Releases VMware vSphere 8.0 STIG Version 1, Release 1

- VMware vSphere Virtual Machine Backups using Synology Active Backup for Business
